Collaborative Filtering for Movie Recommendations

Author: Siddhartha Banerjee

Date created: 2020/05/24

Last modified: 2020/05/24

Description: Recommending movies using a model trained on the MovieLens dataset.


Introduction

This example demonstrates collaborative filtering using the MovieLens dataset to recommend movies to users. The MovieLens ratings dataset lists the ratings given by a set of users to a set of movies. Our goal is to predict ratings for movies a user has not yet watched. The movies with the highest predicted ratings can then be recommended to the user.

The steps in the model are as follows:

1. Map user ID to a "user vector" via an embedding matrix.

2. Map movie ID to a "movie vector" via an embedding matrix.

3. Compute the dot product between the user vector and the movie vector to obtain a match score between the user and the movie (the predicted rating).

4. Train the embeddings via gradient descent using all known user-movie pairs.
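The four steps can be made concrete with a minimal NumPy sketch on a made-up toy dataset: 4 users, 5 movies, and ratings already scaled to [0, 1]. This is not the Keras model used below. The biases are omitted, as they are in the step list, and the embedding size, learning rate and iteration count are arbitrary assumptions. An embedding lookup is simply row indexing into a matrix. For a sigmoid output trained with binary cross-entropy, the gradient with respect to the logit is `prediction - target`.

```python
import numpy as np

# Made-up toy data (assumption): (user, movie) pairs and ratings scaled to [0, 1].
users = np.array([0, 0, 1, 2, 3, 3])
movies = np.array([0, 1, 2, 3, 4, 1])
targets = np.array([1.0, 0.75, 0.25, 0.5, 1.0, 0.0])
num_users, num_movies, k = 4, 5, 3

# Steps 1 and 2: one embedding matrix per entity type; row i is entity i's vector.
rng = np.random.default_rng(0)
P = rng.normal(scale=0.1, size=(num_users, k))   # user vectors
Q = rng.normal(scale=0.1, size=(num_movies, k))  # movie vectors


def predict(P, Q, u, m):
    # Step 3: row-wise dot product per (user, movie) pair, squashed to (0, 1).
    logits = np.sum(P[u] * Q[m], axis=1)
    return 1.0 / (1.0 + np.exp(-logits))


def bce(y, p, eps=1e-7):
    # Mean binary cross-entropy between soft targets y and predictions p.
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))


initial_loss = bce(targets, predict(P, Q, users, movies))

# Step 4: full-batch gradient descent over all known pairs.
lr = 0.5
for _ in range(500):
    pred = predict(P, Q, users, movies)
    grad_logits = (pred - targets) / len(targets)  # dLoss/dlogit for sigmoid + BCE
    grad_P = np.zeros_like(P)
    grad_Q = np.zeros_like(Q)
    # np.add.at accumulates correctly when a user or movie appears in several pairs.
    np.add.at(grad_P, users, grad_logits[:, None] * Q[movies])
    np.add.at(grad_Q, movies, grad_logits[:, None] * P[users])
    P -= lr * grad_P
    Q -= lr * grad_Q

final_loss = bce(targets, predict(P, Q, users, movies))
print(f"BCE before training: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Both gradients are computed before either matrix is updated. This keeps each step a true gradient step on the joint loss.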


Setup

```python
import pandas as pd
import numpy as np
from zipfile import ZipFile
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers
from pathlib import Path
import matplotlib.pyplot as plt
```

Load the data

```python
# First, load the data and apply preprocessing.
# Download the actual data from http://files.grouplens.org/datasets/movielens/ml-latest-small.zip
# Use the ratings.csv file
movielens_data_file_url = (
    "http://files.grouplens.org/datasets/movielens/ml-latest-small.zip"
)
movielens_zipped_file = keras.utils.get_file(
    "ml-latest-small.zip", movielens_data_file_url, extract=False
)
keras_datasets_path = Path(movielens_zipped_file).parents[0]
movielens_dir = keras_datasets_path / "ml-latest-small"

# Only extract the data the first time the script is run.
if not movielens_dir.exists():
    with ZipFile(movielens_zipped_file, "r") as zip_file:
        # Extract files
        print("Extracting all the files now...")
        zip_file.extractall(path=keras_datasets_path)
        print("Done!")

ratings_file = movielens_dir / "ratings.csv"
df = pd.read_csv(ratings_file)
```

Data preprocessing

First, we need to perform some preprocessing to encode users and movies as integer indices.

```python
# Get the unique user IDs as a list
user_ids = df["userId"].unique().tolist()
# Map each original user ID (key) to a contiguous integer index (value)
user2user_encoded = {x: i for i, x in enumerate(user_ids)}
# Reverse mapping: integer index (key) to original user ID (value)
userencoded2user = {i: x for i, x in enumerate(user_ids)}
# Same encoding for the movie IDs
movie_ids = df["movieId"].unique().tolist()
movie2movie_encoded = {x: i for i, x in enumerate(movie_ids)}
movie_encoded2movie = {i: x for i, x in enumerate(movie_ids)}
# Replace the raw user and movie IDs with their encoded indices
df["user"] = df["userId"].map(user2user_encoded)
df["movie"] = df["movieId"].map(movie2movie_encoded)

num_users = len(user2user_encoded)
num_movies = len(movie_encoded2movie)
df["rating"] = df["rating"].values.astype(np.float32)
# min and max ratings will be used to normalize the ratings later
min_rating = min(df["rating"])
max_rating = max(df["rating"])

print(
    "Number of users: {}, Number of Movies: {}, Min rating: {}, Max rating: {}".format(
        num_users, num_movies, min_rating, max_rating
    )
)
# Number of users: 610, Number of Movies: 9724, Min rating: 0.5, Max rating: 5.0
```
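The dictionary-based encoding assigns indices in order of first appearance. pandas offers the same mapping through `pd.factorize`, which returns integer codes together with the unique values. With the default `sort=False`, codes also follow order of first appearance. The sketch below checks this equivalence on a hypothetical four-row frame that reuses the `ratings.csv` column names.

```python
import pandas as pd

# Hypothetical toy ratings frame with the same column names as ratings.csv.
toy = pd.DataFrame({"userId": [7, 3, 7, 42], "movieId": [10, 10, 99, 5]})

# Dictionary approach, as in the preprocessing code above.
user_ids = toy["userId"].unique().tolist()
user2user_encoded = {x: i for i, x in enumerate(user_ids)}
dict_codes = toy["userId"].map(user2user_encoded).to_numpy()

# pd.factorize returns (codes, uniques); uniques[code] recovers the original ID,
# so it also plays the role of the reverse dictionary.
codes, uniques = pd.factorize(toy["userId"])
print(codes, uniques.tolist())
```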

Prepare training and validation data

```python
# Prepare training and validation data.
# df.sample draws a random sample of rows; with frac=1 every row is drawn,
# so this simply shuffles the whole DataFrame.
df = df.sample(frac=1, random_state=42)
x = df[["user", "movie"]].values
# Normalize the targets to the [0, 1] range, which makes training easier.
y = ((df["rating"] - min_rating) / (max_rating - min_rating)).values
# Train on the first 90% of the shuffled rows and validate on the remaining 10%.
train_indices = int(0.9 * df.shape[0])
x_train, x_val, y_train, y_val = (
    x[:train_indices],
    x[train_indices:],
    y[:train_indices],
    y[train_indices:],
)
```
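The normalization is a min-max scaling, so it can be inverted to turn the model's sigmoid outputs back into stars. Below is a small sketch that hard-codes the bounds printed during preprocessing (0.5 and 5.0). The helper names `normalize` and `denormalize` are illustrative and not part of the original code.

```python
import numpy as np

# Bounds assumed from the preprocessing output (Min rating: 0.5, Max rating: 5.0).
min_rating, max_rating = 0.5, 5.0


def normalize(r):
    # Min-max scaling to [0, 1], the same transform applied to the targets y.
    return (np.asarray(r, dtype=np.float32) - min_rating) / (max_rating - min_rating)


def denormalize(y):
    # Inverse transform: maps a value in [0, 1] back to the star scale.
    return np.asarray(y, dtype=np.float32) * (max_rating - min_rating) + min_rating


stars = np.array([0.5, 3.0, 5.0])
print(normalize(stars), denormalize(normalize(stars)))
```

For example, a predicted value of 0.5 corresponds to 0.5 × 4.5 + 0.5 = 2.75 stars.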

Create the model

We embed both users and movies into 50-dimensional vectors.

The model computes a match score between user and movie embeddings via a dot product, and adds a per-movie and a per-user bias. The match score is scaled to the [0, 1] interval via a sigmoid, since our ratings were normalized to this range.

```python
EMBEDDING_SIZE = 50


class RecommenderNet(keras.Model):
    def __init__(self, num_users, num_movies, embedding_size, **kwargs):
        super().__init__(**kwargs)
        self.num_users = num_users
        self.num_movies = num_movies
        self.embedding_size = embedding_size
        self.user_embedding = layers.Embedding(
            num_users,
            embedding_size,
            embeddings_initializer="he_normal",
            embeddings_regularizer=keras.regularizers.l2(1e-6),
        )
        self.user_bias = layers.Embedding(num_users, 1)
        self.movie_embedding = layers.Embedding(
            num_movies,
            embedding_size,
            embeddings_initializer="he_normal",
            embeddings_regularizer=keras.regularizers.l2(1e-6),
        )
        self.movie_bias = layers.Embedding(num_movies, 1)

    def call(self, inputs):
        # inputs has shape (batch, 2): column 0 is the encoded user, column 1 the encoded movie.
        user_vector = self.user_embedding(inputs[:, 0])  # (batch, embedding_size)
        user_bias = self.user_bias(inputs[:, 0])  # (batch, 1)
        movie_vector = self.movie_embedding(inputs[:, 1])  # (batch, embedding_size)
        movie_bias = self.movie_bias(inputs[:, 1])  # (batch, 1)
        # Row-wise dot product: one score per user-movie pair, shape (batch, 1).
        dot_user_movie = tf.reduce_sum(user_vector * movie_vector, axis=1, keepdims=True)
        # Add all the components (including biases)
        x = dot_user_movie + user_bias + movie_bias
        # The sigmoid activation forces the predicted rating to be between 0 and 1
        return tf.nn.sigmoid(x)


model = RecommenderNet(num_users, num_movies, EMBEDDING_SIZE)
model.compile(
    loss=keras.losses.BinaryCrossentropy(),
    optimizer=keras.optimizers.Adam(learning_rate=0.001),
)
```
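Inside `call`, the match score has to be computed separately for each row of the batch. The reduction used for that dot product determines whether this happens. The NumPy sketch below compares two reductions on random stand-in vectors; NumPy's `tensordot` uses the same scalar `axes` convention as `tf.tensordot`. A scalar `axes=2` contracts the last two axes of the first array with the first two axes of the second. For two `(batch, dim)` arrays, that means both the batch axis and the embedding axis, which collapses the whole batch into a single number. A row-wise multiply-and-sum keeps one score per pair.

```python
import numpy as np

# Random stand-ins for a batch of looked-up user and movie vectors.
batch, dim = 4, 50
rng = np.random.default_rng(1)
user_vector = rng.normal(size=(batch, dim))
movie_vector = rng.normal(size=(batch, dim))

# axes=2 sums over both axes: a single scalar for the whole batch.
whole_batch = np.tensordot(user_vector, movie_vector, 2)

# Row-wise dot products, shaped (batch, 1) to line up with (batch, 1) bias embeddings.
per_example = np.sum(user_vector * movie_vector, axis=1, keepdims=True)

print(whole_batch.shape, per_example.shape)
```

The scalar result equals the sum of the per-example scores. Adding it to the biases would give every pair in a batch the same interaction term.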

Train the model based on the data split

```python
history = model.fit(
    x=x_train,
    y=y_train,
    batch_size=64,
    epochs=5,
    verbose=1,
    validation_data=(x_val, y_val),
)
# Epoch 1/5
# 1418/1418 [==============================] - 6s 4ms/step - loss: 0.6368 - val_loss: 0.6206
# Epoch 2/5
# 1418/1418 [==============================] - 7s 5ms/step - loss: 0.6131 - val_loss: 0.6176
# Epoch 3/5
# 1418/1418 [==============================] - 6s 4ms/step - loss: 0.6083 - val_loss: 0.6146
# Epoch 4/5
# 1418/1418 [==============================] - 6s 4ms/step - loss: 0.6072 - val_loss: 0.6131
# Epoch 5/5
# 1418/1418 [==============================] - 6s 4ms/step - loss: 0.6075 - val_loss: 0.6150
```

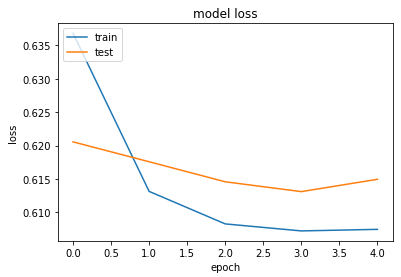

Plot training and validation loss

```python
plt.plot(history.history["loss"])
plt.plot(history.history["val_loss"])
plt.title("model loss")
plt.ylabel("loss")
plt.xlabel("epoch")
plt.legend(["train", "validation"], loc="upper left")
plt.show()
```
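The targets are soft labels in [0, 1], so binary cross-entropy cannot reach zero, and the loss values above are hard to read in terms of stars. One way to report validation error on the original scale is sketched below, as an assumption rather than part of the original example. It maps both targets and predictions back using the min-max bounds (0.5 and 5.0 from the preprocessing output, used as default arguments), then computes the root-mean-square error. The helper name `rmse_in_stars` is hypothetical.

```python
import numpy as np


def rmse_in_stars(y_true_norm, y_pred_norm, min_rating=0.5, max_rating=5.0):
    # Undo the min-max normalization, then compute RMSE in stars.
    span = max_rating - min_rating
    y_true = np.asarray(y_true_norm, dtype=np.float64) * span + min_rating
    y_pred = np.asarray(y_pred_norm, dtype=np.float64) * span + min_rating
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


# Made-up values for illustration only.
print(rmse_in_stars([0.0, 0.5, 1.0], [0.1, 0.5, 0.9]))
```

With the trained model, one would call `rmse_in_stars(y_val, model.predict(x_val).flatten())`. The offset `min_rating` cancels in the difference, so the result is simply the normalized RMSE multiplied by 4.5.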

Show top 10 movie recommendations

```python
# Show top 10 movie recommendations to a user
movie_df = pd.read_csv(movielens_dir / "movies.csv")

# Let us get a user and see the top recommendations.
user_id = df.userId.sample(1).iloc[0]
movies_watched_by_user = df[df.userId == user_id]
movies_not_watched = movie_df[
    ~movie_df["movieId"].isin(movies_watched_by_user.movieId.values)
]["movieId"]
# Keep only movies that have an encoded index (i.e. appear in ratings.csv)
movies_not_watched = list(
    set(movies_not_watched).intersection(set(movie2movie_encoded.keys()))
)
movies_not_watched = [[movie2movie_encoded.get(x)] for x in movies_not_watched]
user_encoder = user2user_encoded.get(user_id)
# Pair the user's index with every unwatched movie index: shape (n, 2)
user_movie_array = np.hstack(
    ([[user_encoder]] * len(movies_not_watched), movies_not_watched)
)
ratings = model.predict(user_movie_array).flatten()
# Indices of the 10 highest predicted ratings, highest first
top_ratings_indices = ratings.argsort()[-10:][::-1]
recommended_movie_ids = [
    movie_encoded2movie.get(movies_not_watched[x][0]) for x in top_ratings_indices
]

print("Showing recommendations for user: {}".format(user_id))
print("====" * 9)
print("Movies with high ratings from user")
print("----" * 8)
top_movies_user = (
    movies_watched_by_user.sort_values(by="rating", ascending=False)
    .head(5)
    .movieId.values
)
movie_df_rows = movie_df[movie_df["movieId"].isin(top_movies_user)]
for row in movie_df_rows.itertuples():
    print(row.title, ":", row.genres)

print("----" * 8)
print("Top 10 movie recommendations")
print("----" * 8)
# isin() keeps the row order of movies.csv, so this list is not sorted by predicted rating.
recommended_movies = movie_df[movie_df["movieId"].isin(recommended_movie_ids)]
for row in recommended_movies.itertuples():
    print(row.title, ":", row.genres)

# Showing recommendations for user: 474
# ====================================
# Movies with high ratings from user
# --------------------------------
# Fugitive, The (1993) : Thriller
# Remains of the Day, The (1993) : Drama|Romance
# West Side Story (1961) : Drama|Musical|Romance
# X2: X-Men United (2003) : Action|Adventure|Sci-Fi|Thriller
# Spider-Man 2 (2004) : Action|Adventure|Sci-Fi|IMAX
# --------------------------------
# Top 10 movie recommendations
# --------------------------------
# Dazed and Confused (1993) : Comedy
# Ghost in the Shell (Kôkaku kidôtai) (1995) : Animation|Sci-Fi
# Drugstore Cowboy (1989) : Crime|Drama
# Road Warrior, The (Mad Max 2) (1981) : Action|Adventure|Sci-Fi|Thriller
# Dark Knight, The (2008) : Action|Crime|Drama|IMAX
# Inglourious Basterds (2009) : Action|Drama|War
# Up (2009) : Adventure|Animation|Children|Drama
# Dark Knight Rises, The (2012) : Action|Adventure|Crime|IMAX
# Star Wars: Episode VII - The Force Awakens (2015) : Action|Adventure|Fantasy|Sci-Fi|IMAX
# Thor: Ragnarok (2017) : Action|Adventure|Sci-Fi
```
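Selecting the top 10 with `argsort()[-10:][::-1]` sorts every predicted rating. With about 9.7k movies this cost is negligible. For a much larger catalog, `np.argpartition` can first isolate the k largest scores in linear time, and only those k then need sorting. The sketch below checks on random stand-in scores that both approaches return the same indices. The helper name `top_k_indices` is hypothetical.

```python
import numpy as np


def top_k_indices(scores, k):
    # Return indices of the k largest scores, highest first.
    scores = np.asarray(scores)
    k = min(k, scores.size)
    if k <= 0:
        return np.array([], dtype=int)
    # argpartition moves the k largest scores into the last k slots (unordered).
    candidates = np.argpartition(scores, -k)[-k:]
    # Sort just those k candidates, descending.
    return candidates[np.argsort(scores[candidates])[::-1]]


rng = np.random.default_rng(2)
scores = rng.random(9000)  # stand-in for model.predict(...).flatten()
fast = top_k_indices(scores, 10)
full_sort = scores.argsort()[-10:][::-1]
print(np.array_equal(fast, full_sort))
```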
